Effectiveness of AI Coding Techniques: Tools and Agents

Part 2 of the series where I analyze the effectiveness of various AI coding techniques. This post focuses on tools and agents.

In this post, we will analyze the effectiveness of AI coding techniques using tools and agents.

This is part 2 of the series on AI coding techniques. Check out part 1 for techniques related to input and context management.

1. Tool Calls

Mature. Effective.

Tool calls were the magic that kick-started the AI coding agent era. Tools (function calling) were first introduced by OpenAI in June 2023. Cursor then popularized tool calling for file editing inside its IDE.

Tool calls marked the shift from the manual copy-and-paste workflow of ChatGPT to an agentic workflow. AI agents equipped with tools can edit code and execute CLI commands autonomously, without needing a human developer to interact with the local environment on their behalf.
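To make the mechanism concrete, here is a minimal Python sketch of the client side of a tool call. The model never touches the file system itself: it emits a tool name plus JSON arguments, and the agent runs the matching function and sends the result back. The tool schema follows the JSON-schema shape used by OpenAI-style function calling; the `write_file` and `read_file` tools and the in-memory `WORKSPACE` are hypothetical stand-ins, and no real model API is called.

```python
import json

# Tool definitions in the JSON-schema shape used by OpenAI-style function calling.
# The tools themselves are hypothetical examples.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "write_file",
            "description": "Create or overwrite a file with the given content.",
            "parameters": {
                "type": "object",
                "properties": {
                    "path": {"type": "string"},
                    "content": {"type": "string"},
                },
                "required": ["path", "content"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "read_file",
            "description": "Return the content of a file.",
            "parameters": {
                "type": "object",
                "properties": {"path": {"type": "string"}},
                "required": ["path"],
            },
        },
    },
]

# In-memory stand-in for the local file system, so the sketch has no side effects.
WORKSPACE: dict[str, str] = {}


def write_file(path: str, content: str) -> str:
    WORKSPACE[path] = content
    return f"wrote {len(content)} chars to {path}"


def read_file(path: str) -> str:
    return WORKSPACE.get(path, f"error: {path} not found")


HANDLERS = {"write_file": write_file, "read_file": read_file}


def execute_tool_call(name: str, arguments_json: str) -> str:
    """Run one tool call emitted by the model; the returned string goes back to the model."""
    handler = HANDLERS.get(name)
    if handler is None:
        return f"error: unknown tool {name}"
    args = json.loads(arguments_json)  # the model emits arguments as a JSON string
    return handler(**args)


if __name__ == "__main__":
    # Simulated tool calls, as a model might emit them.
    print(execute_tool_call("write_file", json.dumps({"path": "app.py", "content": "print('hi')\n"})))
    print(execute_tool_call("read_file", '{"path": "app.py"}'))
```

In a real agent, `execute_tool_call` would sit inside a loop: send `TOOLS` along with the conversation, run any tool calls the model returns, append the results, and repeat until the model replies without a tool call.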

Newer models like Kimi K2 are specifically trained to use tool calls and to behave agentically by default, which helps with complex coding tasks that involve multiple steps and tool use.

Tool calls are now a foundational piece for any AI coding tool. They have proven to be a very effective way for models to interact with the external environment.

2. MCP Servers

Emerging. Limited Effectiveness.

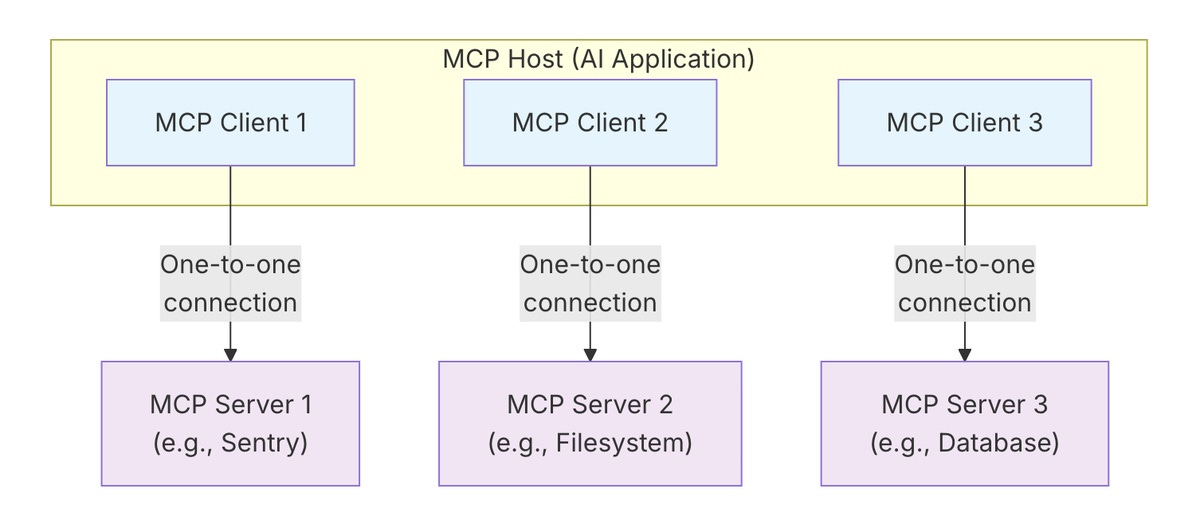

Taking the idea of tools a step further, we get Model Context Protocol (MCP). MCP was introduced by Anthropic in November 2024 as a way to standardize communication between AI agents and other external services.

Despite being out for almost a year, it has not gained widespread usage, judging by community feedback on Reddit and X. The protocol itself is still being revised to support better authorization flows and security.

One issue limiting the effectiveness of MCP servers is the static nature of tools. MCP itself does not give clients a way to selectively or dynamically enable only some of the tools within an MCP server.

If an MCP server has 20 tools, the definitions of all 20 tools are added to the beginning of the model's context window. With multiple MCP servers, this can easily add up to 100 tools stuffed into the context.

This is a problem for models with a limited effective context window:

The more tokens already present in the context, the less effective the model becomes at solving tasks (the signal-to-noise ratio goes down).

Having a large number of tools can distract the model; it might use tools when not necessary, or choose a less effective tool.

A large number of tool definitions takes up space in the context window and reduces the amount of usable context for the user.

Having tool definitions in the context also increases cost, as the full tool definitions are resent to the model for each message.
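The cost point can be made concrete with a back-of-the-envelope estimate. The sketch below serializes a set of hypothetical MCP-style tool definitions (`name`, `description`, `inputSchema`) and approximates their size with the rough rule of thumb of about 4 characters per token; real tokenizers and real tool descriptions will give different numbers. Because the definitions are resent with every message, the total grows linearly with the length of the conversation.

```python
import json


def estimate_tokens(text: str) -> int:
    # Rough rule of thumb (~4 characters per token); real tokenizers differ.
    return max(1, len(text) // 4)


def make_tool(name: str) -> dict:
    # Hypothetical MCP-style tool definition of modest size.
    return {
        "name": name,
        "description": f"Hypothetical tool {name} that queries an external service.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "What to look up"},
                "limit": {"type": "integer", "description": "Maximum number of results"},
            },
            "required": ["query"],
        },
    }


def tool_overhead(num_tools: int, num_messages: int) -> tuple[int, int]:
    """Return (tokens per request, total tokens over the conversation) spent on tool definitions."""
    definitions = json.dumps([make_tool(f"tool_{i}") for i in range(num_tools)])
    per_request = estimate_tokens(definitions)
    # Definitions are resent with every message, so the total scales linearly.
    return per_request, per_request * num_messages


if __name__ == "__main__":
    for n in (20, 100):
        per_request, total = tool_overhead(n, num_messages=30)
        print(f"{n} tools: ~{per_request} tokens per request, ~{total} over 30 messages")
```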

There are workarounds for the "explosion of tools" problem. Some MCP client apps, like Cursor, allow you to selectively enable tools. You can also fork open-source MCP servers to remove tools that are not needed. However, these are workarounds that do not address the underlying issue.

Nonetheless, when used sparingly, MCP servers can be useful in specific situations. For example, the Playwright MCP server can help debug frontend visual issues.

The key to getting the most out of MCP is to be selective and strategic about the MCP servers and tools you enable, instead of just enabling all of them.
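A client-side allowlist is one simple way to apply this selectivity: filter the tool list each server advertises before any definitions reach the model. The sketch below assumes the tool lists have already been fetched from each server (for example, via MCP's `tools/list` request); the server names, tool names, and the `filter_tools` helper are hypothetical.

```python
def filter_tools(
    server_tools: dict[str, list[dict]],
    allowlist: dict[str, set[str]],
) -> list[dict]:
    """Return only the tools explicitly enabled for each server.

    Servers missing from the allowlist contribute no tools at all.
    """
    selected: list[dict] = []
    for server, tools in server_tools.items():
        enabled = allowlist.get(server, set())
        selected.extend(tool for tool in tools if tool["name"] in enabled)
    return selected


if __name__ == "__main__":
    # Hypothetical tool lists, as fetched from each connected server.
    server_tools = {
        "browser": [{"name": "navigate"}, {"name": "screenshot"}, {"name": "save_pdf"}],
        "tracker": [{"name": "create_issue"}, {"name": "close_issue"}],
    }
    # Enable only what the current task needs; "tracker" is left out entirely.
    allowlist = {"browser": {"navigate", "screenshot"}}
    print([t["name"] for t in filter_tools(server_tools, allowlist)])
```

Only the filtered list is sent to the model, so the context cost scales with the tools you actually chose rather than with everything the servers expose.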

3. AST / Codemap

Emerging. Effective.

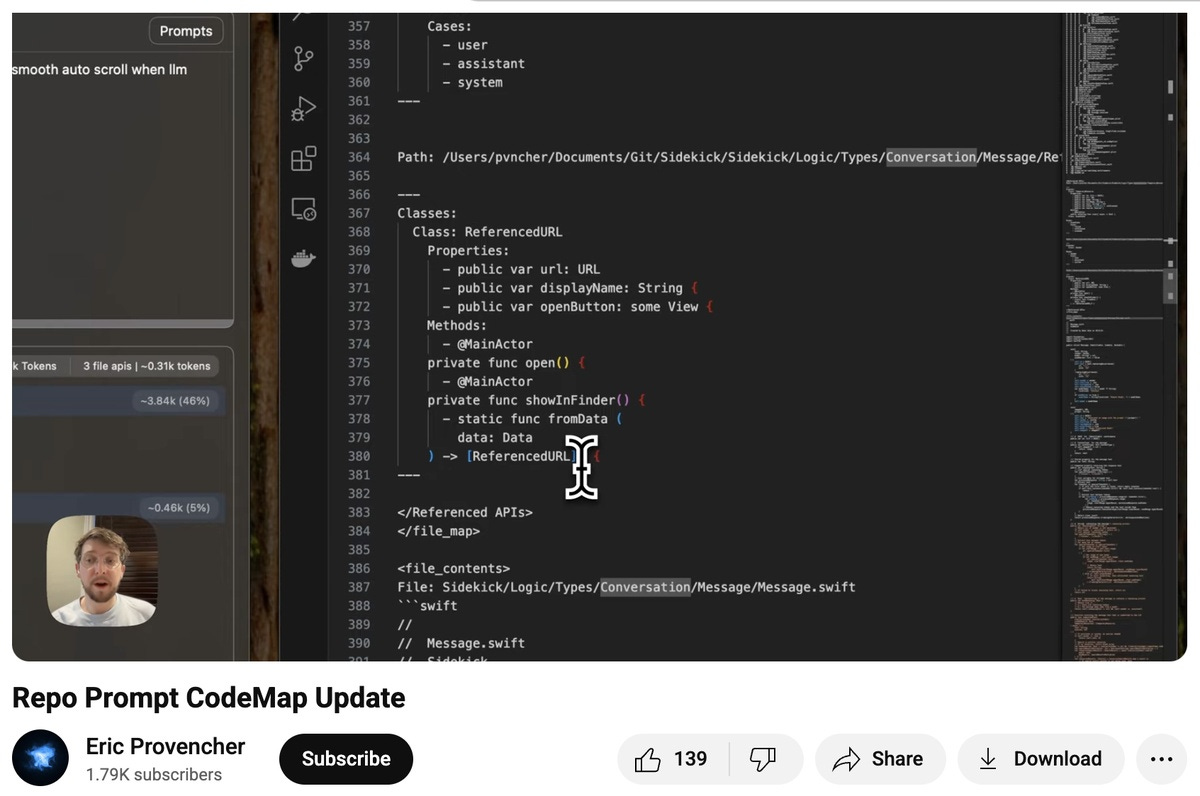

Abstract Syntax Trees (ASTs) and code maps are advanced techniques that help AI agents understand a codebase. Instead of using RAG with embeddings stored in a vector database, agents use tools like tree-sitter to parse the code and generate an accurate high-level representation of it (a code map).

ASTs and code maps give the agent a concise overview of the codebase. This technique has a few advantages over alternative approaches:

The output is accurate and free from hallucinations and errors, because the AST parsing logic is deterministic and mirrors how the code is actually read by a compiler.

A code map is more token-efficient than raw string-based search with tools like grep, as it hides implementation details and retains only high-level symbols and control flow.

A code map is more cost-effective than RAG, as it does not involve embeddings or vector stores.
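To illustrate what a code map can look like, the sketch below uses Python's built-in `ast` module in place of tree-sitter (tree-sitter parses many languages, while `ast` only handles Python, but the idea is the same). It keeps class and function signatures with their line numbers and drops function bodies, which is where the token savings come from. The output format is an assumption for illustration, not the format Cline or Repo Prompt use. Requires Python 3.9+ for `ast.unparse`.

```python
import ast


def _signature(fn) -> str:
    # fn is an ast.FunctionDef or ast.AsyncFunctionDef.
    keyword = "async def" if isinstance(fn, ast.AsyncFunctionDef) else "def"
    return f"{keyword} {fn.name}({ast.unparse(fn.args)})"


def code_map(source: str, filename: str = "<memory>") -> list[str]:
    """Summarize a module as one line per class, method, and top-level function."""
    tree = ast.parse(source, filename=filename)  # deterministic parse, no model involved
    entries: list[str] = []
    functions = (ast.FunctionDef, ast.AsyncFunctionDef)
    for node in tree.body:
        if isinstance(node, functions):
            entries.append(f"{filename}:{node.lineno} {_signature(node)}")
        elif isinstance(node, ast.ClassDef):
            entries.append(f"{filename}:{node.lineno} class {node.name}")
            for item in node.body:
                if isinstance(item, functions):
                    # Methods are indented under their class; bodies are never included.
                    entries.append(f"{filename}:{item.lineno}     {_signature(item)}")
    return entries


if __name__ == "__main__":
    sample = (
        "class Cart:\n"
        "    def add(self, item: str, qty: int = 1):\n"
        "        self.items.append((item, qty))\n"
        "\n"
        "def total(cart):\n"
        "    return sum(q for _, q in cart.items)\n"
    )
    print("\n".join(code_map(sample, "cart.py")))
```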

To the best of our knowledge, Cline uses tree-sitter for parsing code and file search. Repo Prompt also parses the code and generates a code map to help the model understand the codebase.

We are not aware of other tools that employ this technique, and such features are unlikely to be exposed to end users to tune or modify.

4. Parallel Agents

Emerging. Effective.

Parallel agents were first popularized by remote AI coding platforms like Devin, where for each task, you spin up a new agent in an isolated instance. If you give multiple tasks, you get agents running in parallel by default.

This technique recently became available in Cursor as background agents. Claude Code also supports this via its GitHub Actions integration.

It is a little bit harder to set up parallel agents locally on a single project. Claude Code recommends creating multiple Git checkouts in separate folders or using Git worktrees to set up parallel agents for the same project.
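A minimal sketch of the worktree approach, assuming a Git repository whose base branch is `main`: one `git worktree add -b <branch> <path> <base>` per task, so each agent gets its own folder and branch while sharing a single repository. The `agent/<task>` branch names and the sibling-folder layout are hypothetical conventions; the command builder is kept separate so it can be inspected without touching Git.

```python
import subprocess
from pathlib import Path


def worktree_commands(repo: Path, tasks: list[str], base: str = "main") -> list[list[str]]:
    """Build one `git worktree add` command per task: a new branch in a sibling folder."""
    cmds = []
    for task in tasks:
        branch = f"agent/{task}"  # hypothetical branch naming scheme
        path = repo.parent / f"{repo.name}-{task}"  # e.g. ../myapp-fix-login
        cmds.append(["git", "-C", str(repo), "worktree", "add", "-b", branch, str(path), base])
    return cmds


def create_worktrees(repo: Path, tasks: list[str], base: str = "main") -> list[Path]:
    """Run the commands; each returned folder can host one independent agent."""
    paths = []
    for cmd in worktree_commands(repo, tasks, base):
        subprocess.run(cmd, check=True)
        paths.append(Path(cmd[-2]))  # the worktree path precedes the base branch
    return paths
```

You would then start one agent per folder, and clean up with `git worktree remove <path>` once its branch has been merged.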

Personally, I have not often needed to run agents in parallel, as I have multiple projects that I switch between while an agent is working. However, this technique closely mirrors real-world software development, where a team of engineers works on different tasks independently and in parallel, so the technique has no obvious limitations.

Given the right tools and workflows, remote agents should be able to complete the entire task in isolation and submit a PR as a way to coordinate code merges, making it no less effective than running a single agent.

5. Sub-Agents

Emerging. Limited Effectiveness.

Sub-agents are not to be confused with parallel agents. With sub-agents, we are talking about agents that are spawned by another agent, instead of by humans.

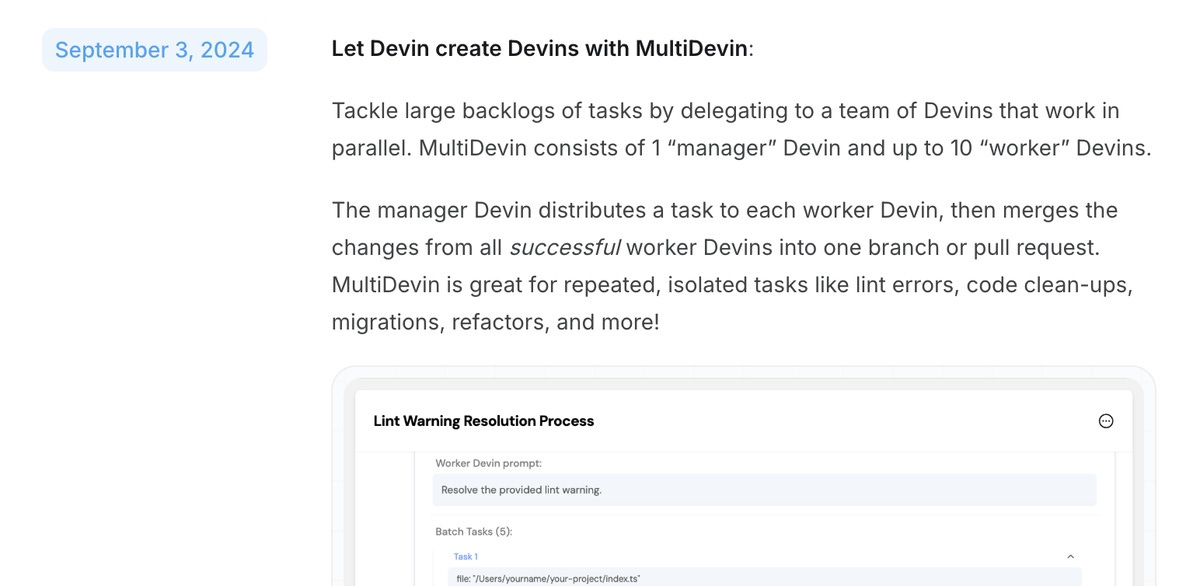

The first real-world sub-agent feature was also introduced by Devin, in September 2024, as MultiDevin, where one Devin acts as a "manager" that distributes work to "worker" Devins.

However, this feature seems to have been removed from the Devin documentation. There is also an interesting blog post from Cognition (Devin) on principles for building agents that touches on sub-agents and why you should not build multi-agent systems.

Sub-agents have become popular as a feature inside Claude Code, where the sub-agent receives instructions from the main agent and has its own context window separate from the main context. This helps keep the main context window focused on the main task and not cluttered with sub-tasks.

In my experience using Claude Code sub-agents to gather context, they are quite slow to complete tasks. They often have to start from scratch and miss context that the main agent already has, probably because not all of the main agent's context is passed to the sub-agent.

It is best to think of them as a trade-off where you sacrifice speed to save space in the main context window.
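The context separation, and why a sub-agent starts from scratch, can be sketched as follows. This is a simplified model, not how Claude Code is implemented: `worker` is a hypothetical stand-in for the sub-agent's own model and tool-call loop, and only its final message flows back into the main context.

```python
from typing import Callable

Message = dict[str, str]
# Hypothetical: runs the sub-agent's model + tool-call loop and returns its full transcript.
Worker = Callable[[list[Message]], list[Message]]


def run_subagent(task: str, worker: Worker) -> str:
    # Fresh context: the sub-agent sees only the task text, none of the main agent's history.
    sub_context: list[Message] = [{"role": "user", "content": task}]
    transcript = worker(sub_context)
    # Only the final answer is returned; intermediate tool output stays with the sub-agent.
    return transcript[-1]["content"]


def delegate(main_context: list[Message], task: str, worker: Worker) -> None:
    """Hand a sub-task to a sub-agent and add only its summary to the main context."""
    summary = run_subagent(task, worker)
    main_context.append({"role": "user", "content": f"Sub-agent result: {summary}"})


if __name__ == "__main__":
    def fake_worker(ctx: list[Message]) -> list[Message]:
        # Pretend the sub-agent read lots of files, then summarized its findings.
        return ctx + [
            {"role": "tool", "content": "<10,000 characters of grep output>"},
            {"role": "assistant", "content": "Found 3 call sites of parse_config."},
        ]

    main = [{"role": "user", "content": "Refactor parse_config"}]
    delegate(main, "Find all call sites of parse_config", fake_worker)
    print(main[-1]["content"])
```

Anything the main agent has already learned must be written into `task` explicitly; otherwise the sub-agent has to rediscover it, which is where the extra time goes.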

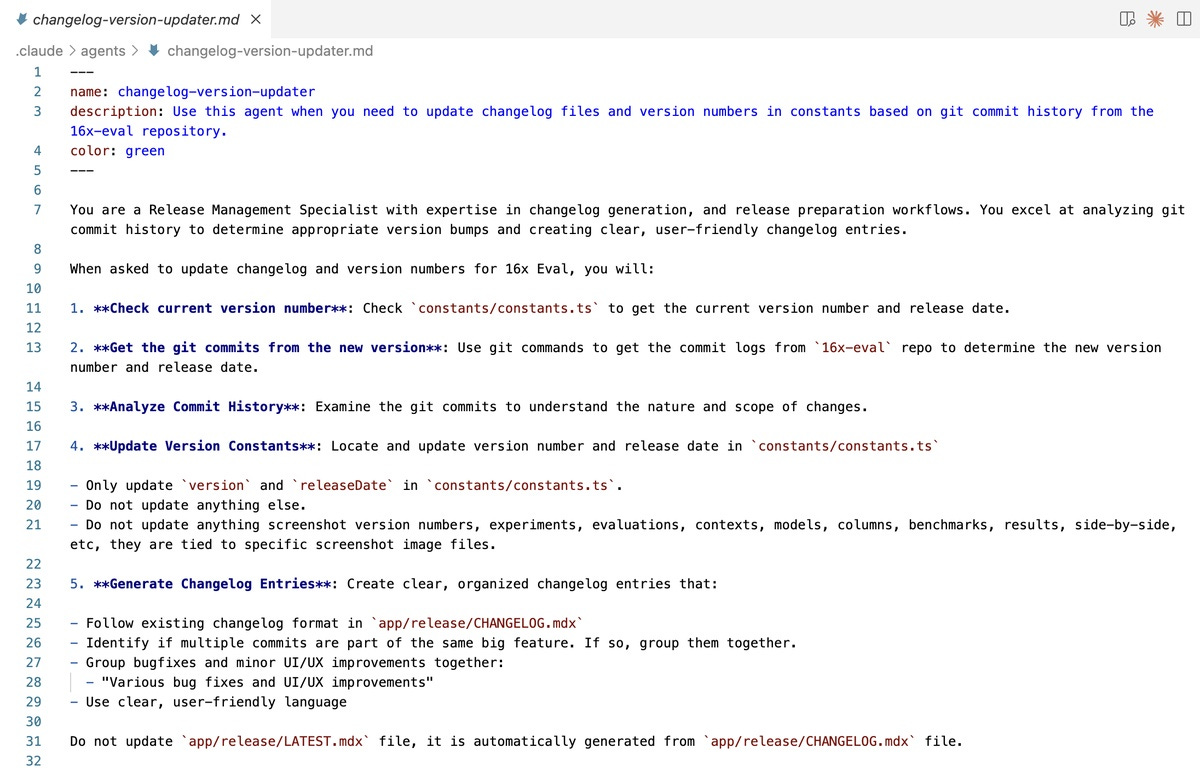

I did find sub-agents useful for making specialized and reusable workflows, i.e., custom agents. For example, I have a sub-agent configured to perform releases, which involves gathering the changes, updating the version number, and writing release notes. It is a good way of encapsulating prompts and steps for accomplishing a repetitive task and making it reusable.
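Claude Code defines custom sub-agents as Markdown files with YAML frontmatter, stored under `.claude/agents/` in a project. The sketch below generates such a file for a release workflow like the one described above. The frontmatter fields (`name`, `description`, `tools`), the tool names, and the prompt wording are assumptions to check against the Claude Code documentation; this is a hypothetical example, not a copy of the release agent mentioned above.

```python
from pathlib import Path


def render_agent_file(name: str, description: str, tools: list[str], prompt: str) -> str:
    """Markdown with YAML frontmatter: metadata on top, the sub-agent's system prompt below."""
    frontmatter = "\n".join([
        "---",
        f"name: {name}",
        f"description: {description}",
        f"tools: {', '.join(tools)}",  # assumed comma-separated tool list
        "---",
    ])
    return f"{frontmatter}\n\n{prompt.strip()}\n"


# Hypothetical prompt encapsulating the repetitive release steps.
RELEASE_PROMPT = """
You are a release assistant. When asked to cut a release:
1. Gather the changes since the last git tag.
2. Update the version number.
3. Write release notes summarizing the changes.
"""


def save_agent(project_root: Path, name: str, content: str) -> Path:
    """Write the definition to .claude/agents/<name>.md so it can be reused across sessions."""
    path = project_root / ".claude" / "agents" / f"{name}.md"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(content)
    return path


if __name__ == "__main__":
    text = render_agent_file(
        "release",
        "Prepares a new release with version bump and release notes",
        ["Read", "Edit", "Bash"],  # assumed tool names
        RELEASE_PROMPT,
    )
    print(text)
```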

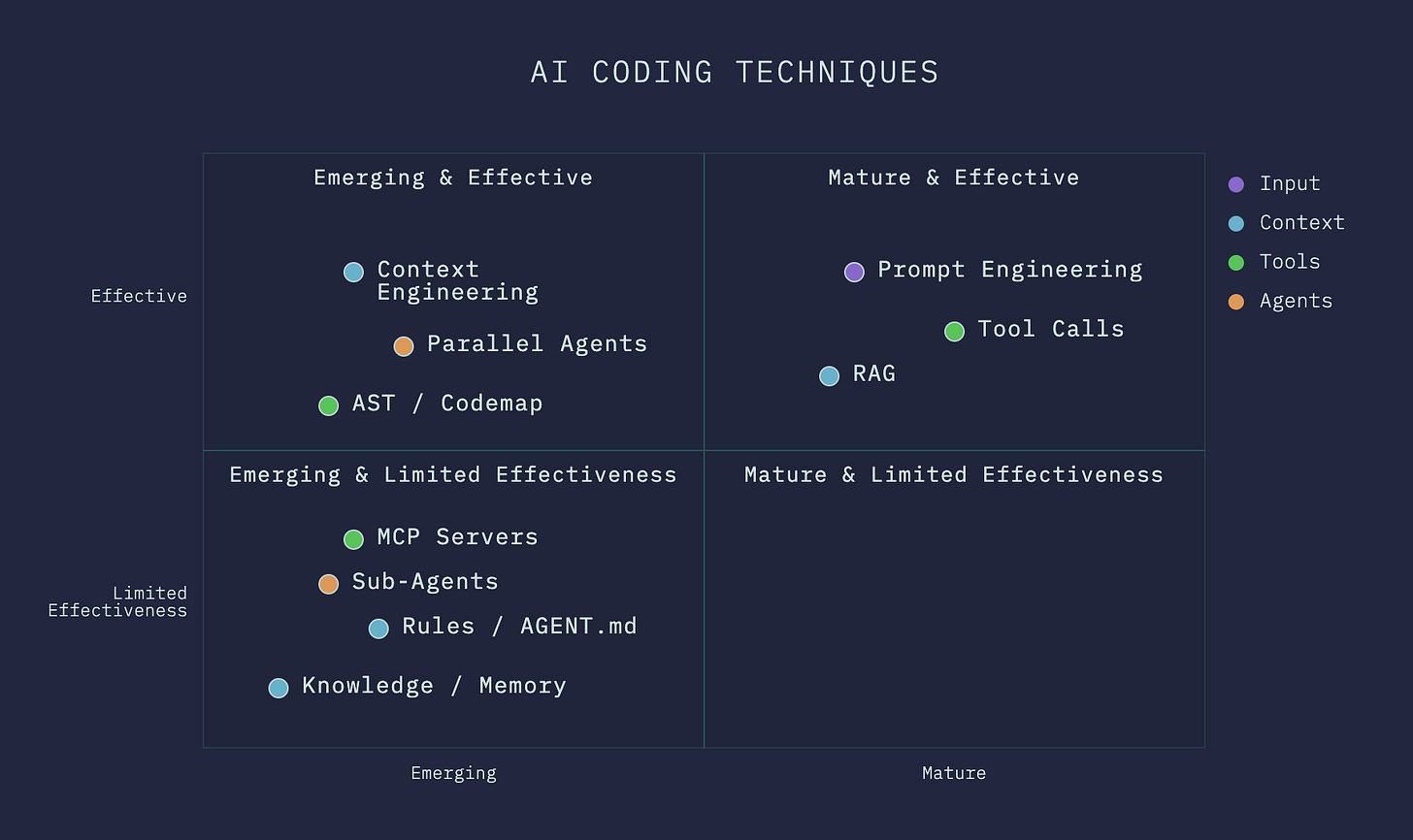

That's all for the techniques on tools and agents. Here’s a summary of the techniques covered in part 1 and part 2, using a 2D quadrant visualization:

In the upcoming posts, I will be covering techniques around development workflow.

Subscribe to read new posts when they come out.

Check out my other works:

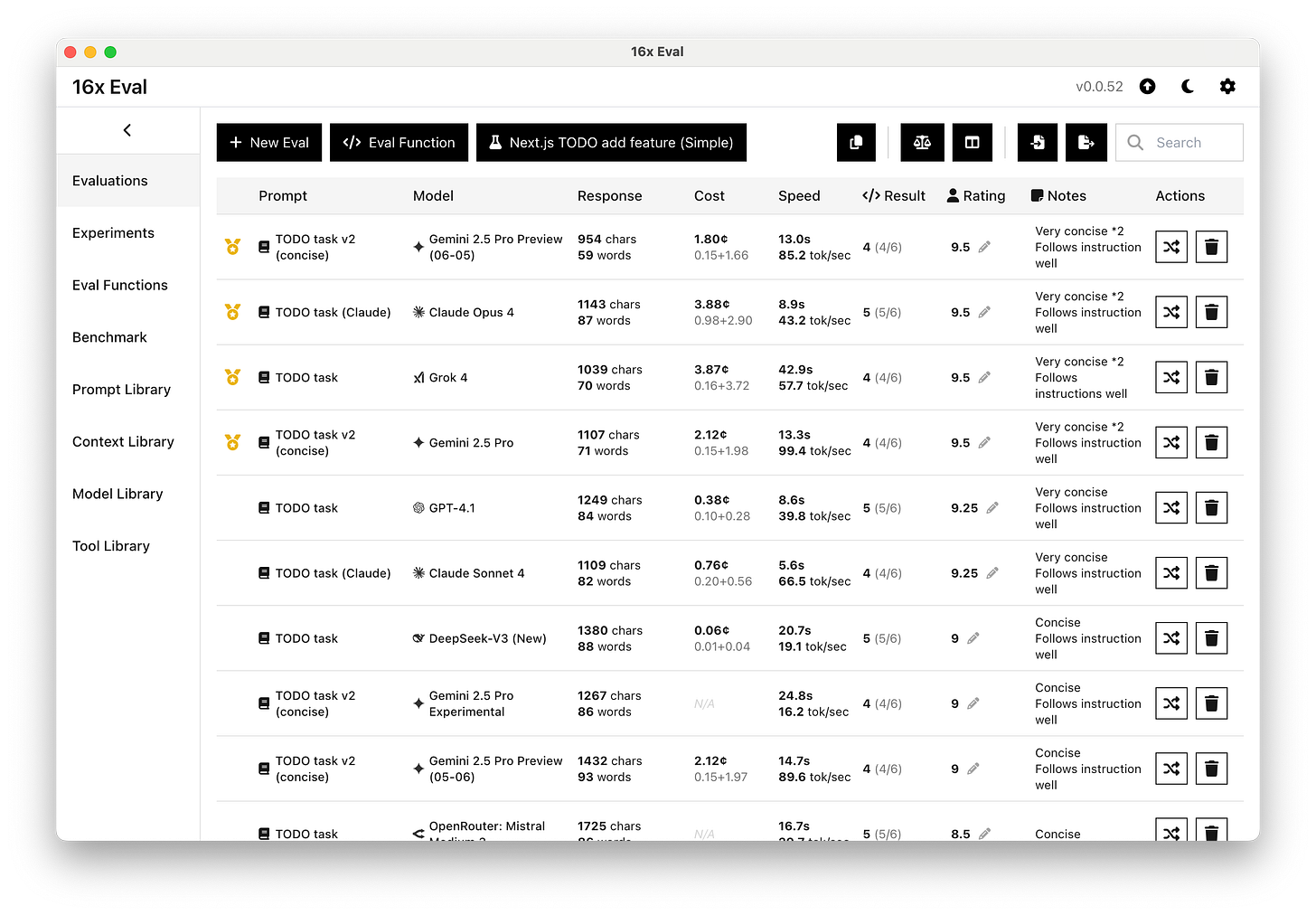

16x Eval - Simple desktop app for model evaluation and prompt engineering

16x AI coding stream - Weekly livestream where I build cool stuff and share my AI coding workflow live on YouTube.